Research

-

Rational Inverse Reasoning

TL;DR: This paper introduces a framework for few-shot learning from demonstration by inferring the embodied reasoning process behind observed actions. On challenging reasoning tasks, our method achieves strong generalization to new tasks with only 1-3 demonstrations, moving closer towards human performance. -

Hierarchical Vision-Language Planning for Multi-Step Humanoid Manipulation

TL;DR: This work introduces a hierarchical and modular vision-language architecture for multi-step humanoid manipulation tasks. Specifically, we leverage vision-language models (VLMs) for high-level planning and action-chunking transformer (ACT) and whole-body RL for mid and low-level control. -

![Image for Investigating Vision Foundational Models for Tactile Representation Learning]()

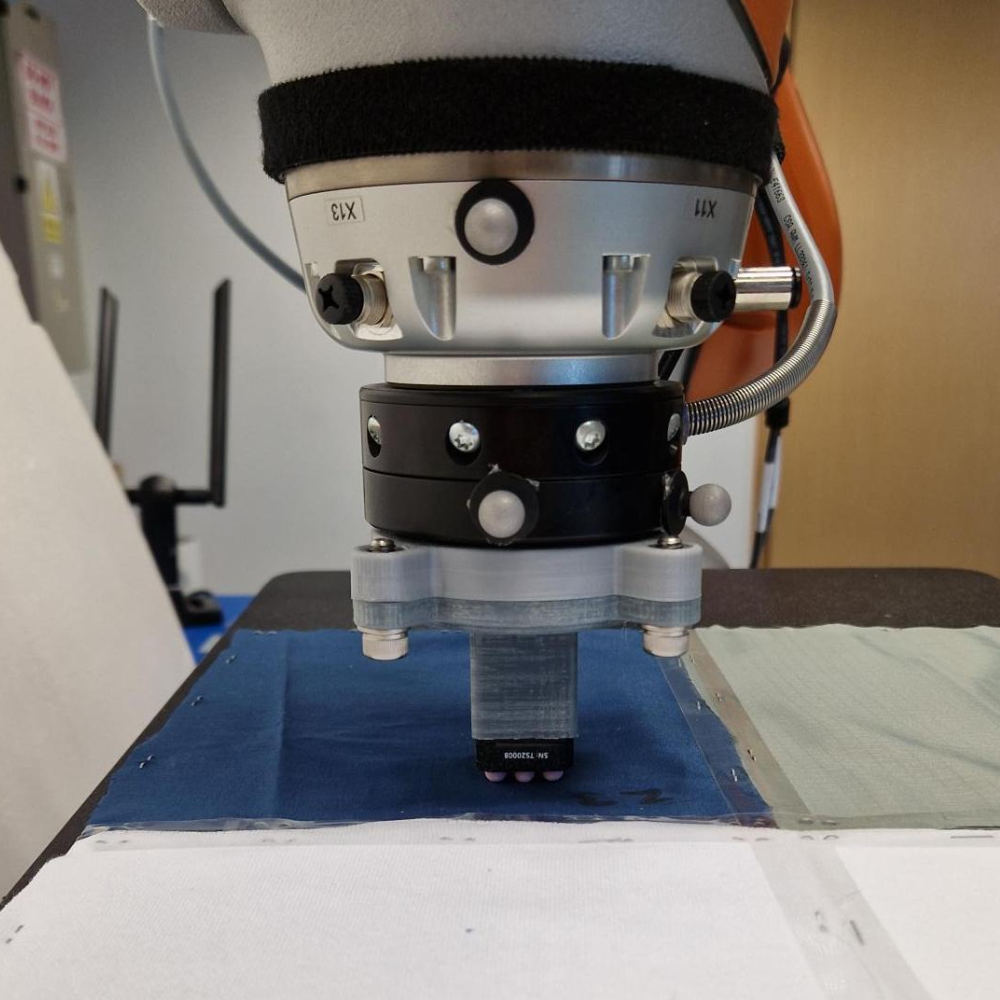

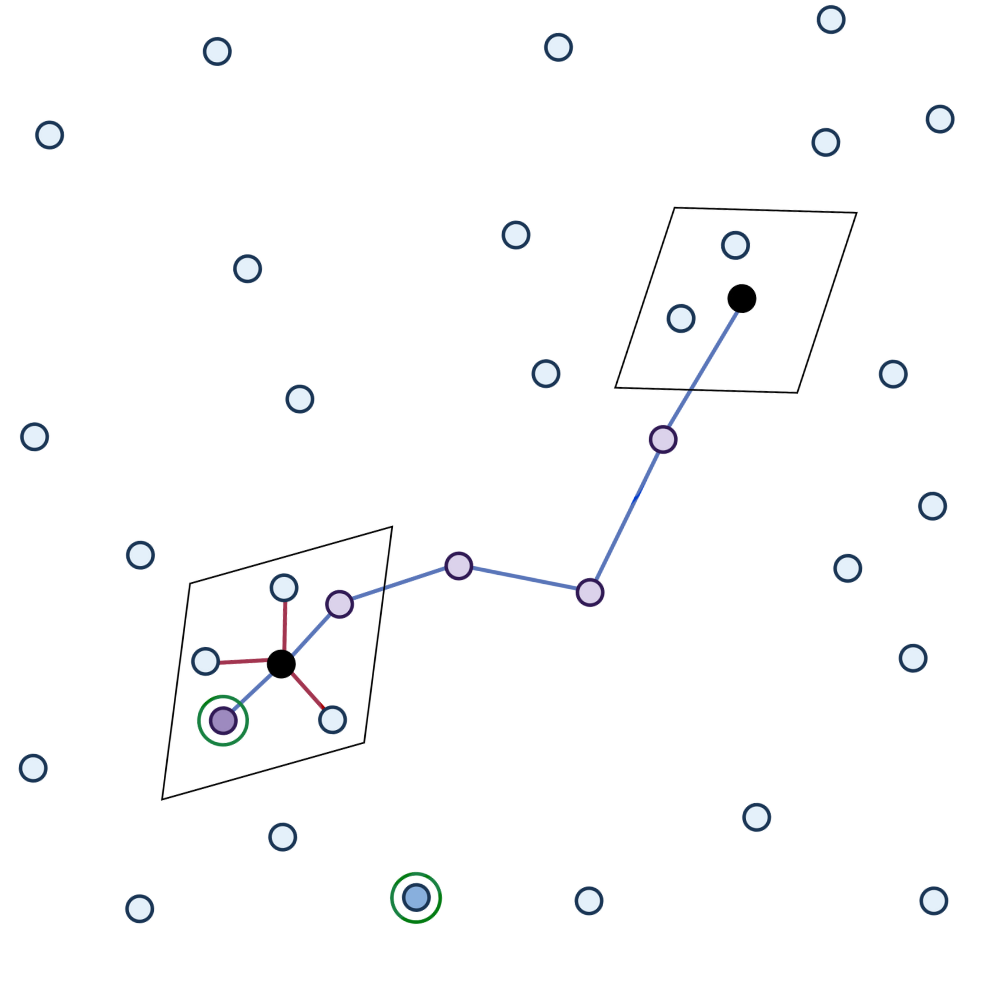

Investigating Vision Foundational Models for Tactile Representation Learning

TL;DR: This paper explores the use of vision foundational models and pre-trained representations to enhance tactile representation learning and multi-modal continual learning. -

![Image for Towards Optimal Compression: Joint Pruning and Quantization]()

Towards Optimal Compression: Joint Pruning and Quantization

TL;DR: This work presents a method for simultaneously pruning and quantizing neural networks by approximately minimizing the distance in parameter space. -

![Image for FIT: A Metric for Model Sensitivity]()

FIT: A Metric for Model Sensitivity

TL;DR: This paper introduces a Fisher Information Metric approximation method for model sensitivity to low-bit quantization.